Battery market development encounters the high and low temperature bottleneck

Amid the global wave of energy transition, the battery industry is expanding at an unprecedented pace. In 2025, China's sodium-ion battery production increased by 96% year-on-year, and the output of cathode materials for sodium-ion batteries more than doubled. Concurrently, the wide-temperature-range battery market is experiencing rapid growth. In 2025, the market size for high and low temperature batteries in China reached 18.73 billion RMB, a year-on-year increase of 24.6%, and is projected to grow to 22.98 billion RMB by 2026. However, beneath this prosperity lies a core contradiction that demands urgent resolution: the extreme sensitivity of batteries to temperature.

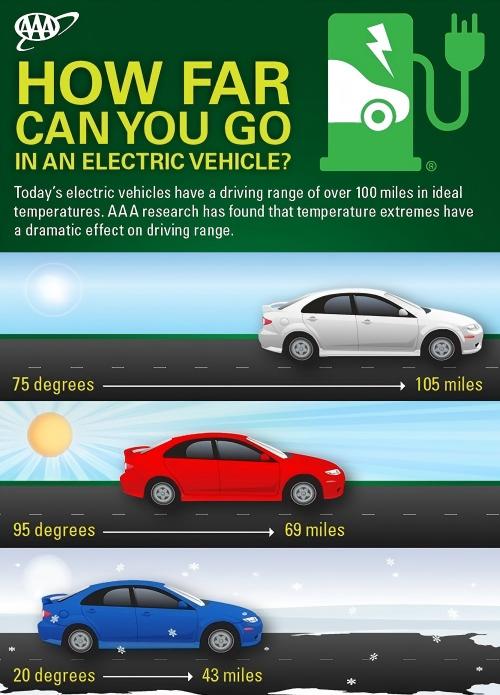

In high-latitude regions, winter temperatures can drop below -40℃, while in low-latitude areas, summer surface temperatures can exceed 50℃. In real-world environments with such wide temperature swings, traditional lithium-ion batteries (with a typical operating range of 0-40℃) degrade significantly: at low temperatures, electric vehicle range plummets and charging becomes difficult; at high temperatures, lifespan shortens and thermal runaway may even be triggered. Sodium-ion batteries have emerged as promising contenders thanks to their excellent low-temperature performance (CATL's sodium-ion battery retains over 90% of its capacity at -40℃ and can still discharge at -50℃), but their limited energy density prevents them from fully replacing lithium-ion batteries. Enabling batteries to withstand both extreme cold and intense heat has become the core challenge in the industry's transition from the "laboratory stage" to the "all-climate market," and the prerequisite for crossing this threshold is scientific, rigorous high and low temperature testing.

Figure 1 electric vehicle range at different temperatures

Solving and validating performance degradation under high and low temperature

Various improvement methods have been developed to mitigate battery performance degradation under high and low temperatures, and research into new materials and systems capable of withstanding extreme temperature scenarios has become a current research hotspot.

Mechanism analysis of performance degradation under high and low temperature

The performance degradation of batteries under extreme temperatures is essentially a comprehensive disruption of internal electrochemical kinetics.

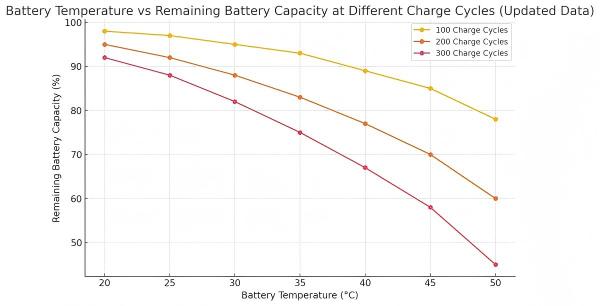

In low-temperature environments, electrolyte viscosity increases sharply while ionic conductivity plummets, directly impeding lithium-ion migration. More critically, during low-temperature charging, lithium ions struggle to intercalate into the graphite anode and are forced to reduce to metallic lithium on the anode surface, forming lithium dendrites—this not only permanently consumes active lithium but may also pierce the separator, causing internal short circuits. Concurrently, the SEI layer becomes damaged due to severe volume changes in the electrode material, exposing fresh graphite surfaces that continuously consume electrolyte and lithium ions, resulting in irreversible capacity loss.

In high-temperature environments, the challenges are fundamentally different. Side reactions accelerate exponentially: electrolyte decomposition, dissolution of transition metals from cathode materials, and excessive growth of the SEI film. These reactions continuously consume active lithium ions and electrolyte, leading to accelerated capacity degradation. More critically, high temperature is the most direct external trigger for battery thermal runaway—once an individual cell overheats, it can trigger a chain of exothermic reactions, potentially igniting the entire battery pack within seconds.

Figure 2 battery cycle capacity degradation curves at different temperatures
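The "exponential" acceleration of side reactions described above is commonly approximated with the Arrhenius relation, k = A·exp(−Ea/RT). The minimal sketch below assumes that relation holds and uses a hypothetical activation energy of 50 kJ/mol (not a value from this article) to show how much faster a side reaction would run at elevated temperatures than at 25℃. The same expression gives ratios below 1 for sub-zero temperatures, mirroring the sluggish kinetics described for the cold case.

```python
import math

R = 8.314  # universal gas constant, J/(mol*K)

def arrhenius_ratio(t1_c: float, t2_c: float, ea_j_per_mol: float) -> float:
    """Return k(T2)/k(T1) for a reaction obeying k = A*exp(-Ea/(R*T)).

    Temperatures are given in degrees Celsius and converted to kelvin;
    the pre-exponential factor A cancels out of the ratio.
    """
    t1 = t1_c + 273.15
    t2 = t2_c + 273.15
    return math.exp(-ea_j_per_mol / R * (1.0 / t2 - 1.0 / t1))

# Hypothetical activation energy for a generic side reaction (assumption).
EA = 50_000.0
for t in (-20.0, 25.0, 45.0, 60.0):
    print(f"{t:>6.1f} C: {arrhenius_ratio(25.0, t, EA):.2f}x the 25 C rate")
```

Real cells involve many coupled reactions with different, often poorly known activation energies, so these ratios indicate a trend rather than a prediction for any particular chemistry.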

Methods to improve battery performance under high and low temperature

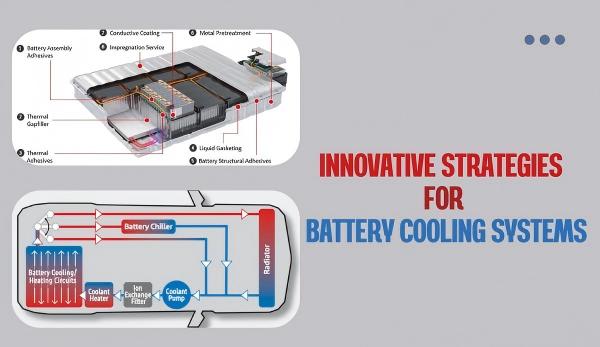

Targeting the mechanisms described above, the industry has developed a three-pronged improvement approach spanning materials, structure, and management. At the material level, low-temperature electrolyte formulations (such as those incorporating FEC and LiFSI additives) have been developed; hard carbon and silicon-based anodes are being adopted in place of graphite for faster lithium diffusion; and single-crystal cathodes are used to improve structural stability. For high-temperature performance, electrolyte additives that raise the decomposition temperature and suppress excessive SEI growth are employed. At the structural level, nano-coatings (e.g., Al2O3, Li3PO4) stabilize the SEI film, while carbon nanotube/graphene composite conductive agents reduce electrode polarization. At the management level, low-temperature preheating combined with low-current charging, along with dynamic power limiting at high temperatures, has become a standard feature of intelligent battery management systems (BMS).

Figure 3 battery pack thermal management system
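The management-level strategy above (preheating and low-current charging in the cold, power limiting in the heat) can be sketched as a temperature-dependent charge-current limit. This is a hypothetical illustration: the 0℃, 10℃, 45℃, and 55℃ breakpoints and the 20% cold-charging factor are placeholders, not values from any real BMS or cell datasheet, and a production BMS would also weigh state of charge, cell voltage, and aging.

```python
def allowed_charge_current(cell_temp_c: float, max_current_a: float) -> float:
    """Return a charge-current limit (A) for a given cell temperature.

    All thresholds below are hypothetical placeholders.
    """
    if cell_temp_c < 0.0:
        # Too cold: block charging so the pack can be preheated first.
        return 0.0
    if cell_temp_c < 10.0:
        # Cold: low-current charging to reduce the risk of lithium plating.
        return 0.2 * max_current_a
    if cell_temp_c <= 45.0:
        # Normal window: full rated current.
        return max_current_a
    if cell_temp_c <= 55.0:
        # Hot: derate linearly from 100% at 45 C down to 0% at 55 C.
        return max_current_a * (55.0 - cell_temp_c) / 10.0
    # Above 55 C: stop charging entirely.
    return 0.0
```

For a pack rated at 100 A, this sketch allows 0 A at -10℃ (preheat first), 20 A at 5℃, the full 100 A at 25℃, and 50 A at 50℃.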

Examples of high and low temperature performance of new material/new system batteries

In recent years, academia has produced a number of highly promising new material systems whose high and low temperature performance has been validated through rigorous laboratory testing.

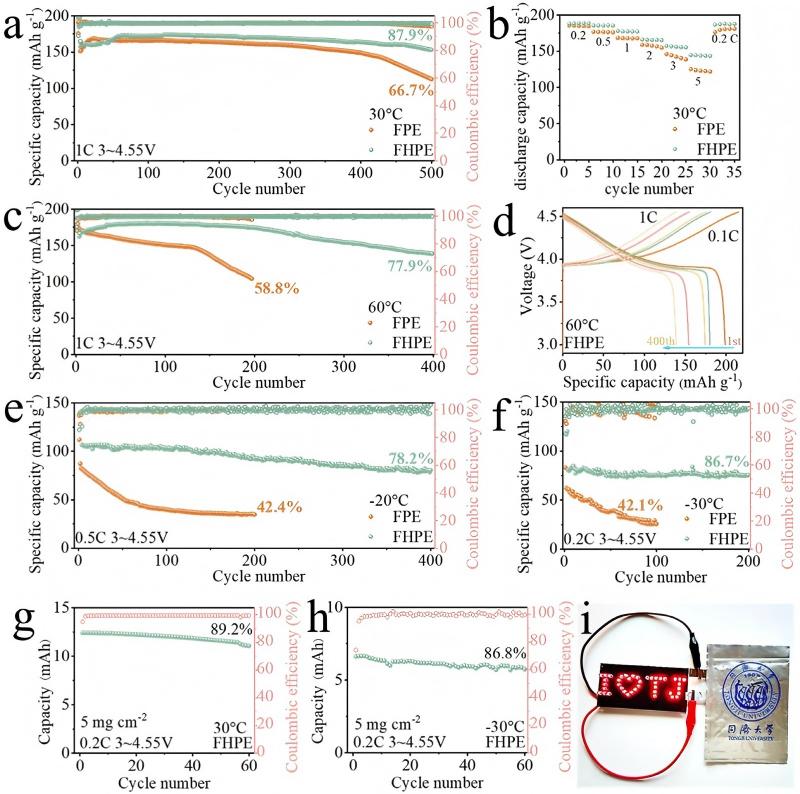

Fluorinated Hybrid Gel Polymer Electrolyte (FHPE) developed by teams from Tongji University, Shanghai Shanshan, and others: This electrolyte maintains a high ionic conductivity of 3.54×10⁻⁴ S/cm even at -30℃. Assembled Li/LiCoO2 coin cells retain 86.7% of their capacity after 200 cycles at -30℃ and 77.9% after 400 cycles at 60℃. In nail penetration tests, the pouch cells continued to operate stably, demonstrating that wide-temperature-range operation and high safety can be achieved simultaneously.

Figure 4 (a-h) electrochemical performance comparison of Li/FPE/LiCoO2 and Li/FHPE/LiCoO2
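Because the FHPE results above were measured over different cycle counts (200 cycles at -30℃, 400 cycles at 60℃), it can help to convert each to an average per-cycle fade. The sketch assumes constant-rate (geometric) fade, retention = (1 − f)^n, which is a simplification: real capacity fade curves are rarely this regular, so the figures are only a rough normalization.

```python
def per_cycle_fade(retention: float, cycles: int) -> float:
    """Average fractional capacity loss per cycle, assuming constant-rate
    (geometric) fade so that retention = (1 - f) ** cycles."""
    if not 0.0 < retention <= 1.0 or cycles <= 0:
        raise ValueError("retention must be in (0, 1] and cycles must be > 0")
    return 1.0 - retention ** (1.0 / cycles)

# Retention figures reported above for the Li/FHPE/LiCoO2 coin cells.
reported = {"-30 C, 200 cycles": (0.867, 200), "60 C, 400 cycles": (0.779, 400)}
for label, (ret, n) in reported.items():
    print(f"{label}: {per_cycle_fade(ret, n) * 100:.3f}% per cycle")
```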

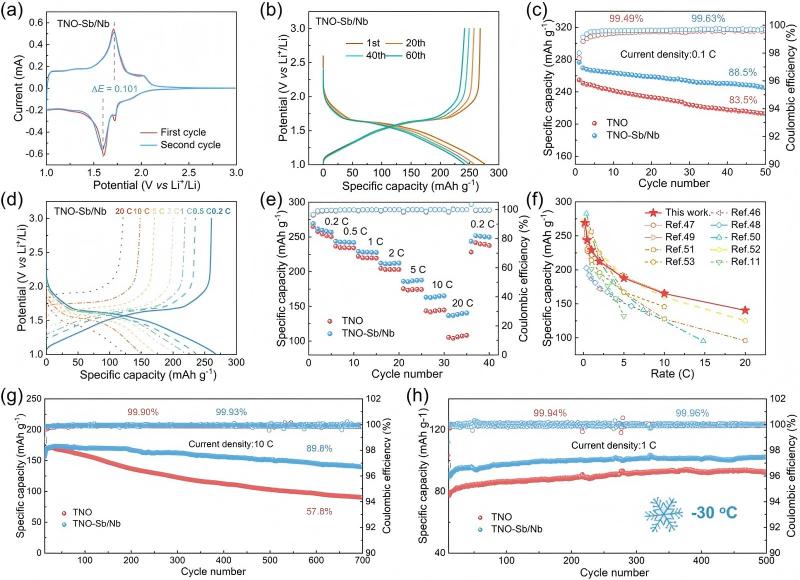

Sb/Nb Dual-Doped TiNb2O7 (TNO) Anode Material developed by Harbin Institute of Technology: By using crystallographic engineering to reconstruct ion transport channels at the atomic scale, this material keeps its crystal structure intact and crack-free after 500 cycles at -30℃, combining stable fast-charging capability with long cycle life at low temperatures. Its preparation process is also compatible with existing production lines.

Figure 5 (a-h) performance comparison between TNO-Sb/Nb and TNO

For these new materials to progress from "validation success" to "commercial-scale application," an indispensable step is rigorous screening through high and low temperature testing equipment—only electrochemical data obtained under controllable, precise, and reproducible extreme temperature conditions can support both academia and industry in determining whether a technology truly possesses engineering value.

Essential performance characteristics of high and low temperature testing equipment

High and low temperature testing equipment serves as the "quality inspection gateway" connecting laboratory material innovation with engineering applications. To accurately replicate the actual performance of batteries under extreme environments and ensure testing process safety, the equipment must possess a series of core performance characteristics, each corresponding to well-defined technical specifications and underlying engineering rationale.

Figure 6 characteristic diagram of integrated high and low temperature testing equipment

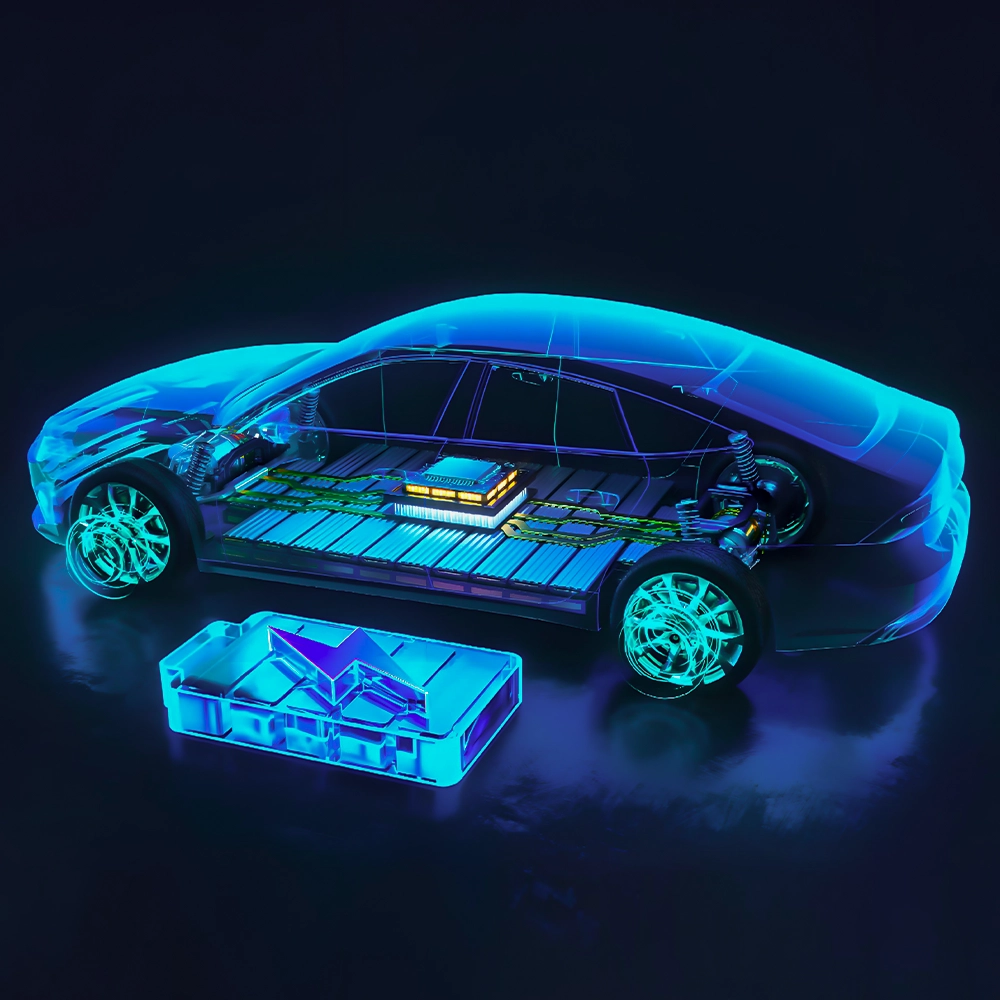

A wide temperature range is the most fundamental performance characteristic of high and low temperature testing equipment. Specifications typically require coverage from -70℃ to +150℃, and some customized models extend to +200℃. This breadth is needed because battery applications have expanded from everyday consumer electronics to extreme environments such as polar scientific expeditions, desert photovoltaic power generation, and deep space exploration: Arctic winter temperatures can drop as low as -50℃, the interior of an enclosed vehicle parked in summer sun can exceed 60℃, and batteries in space must withstand drastic thermal cycling from -120℃ to +120℃. Only equipment with sufficiently broad temperature coverage can provide rigorous validation conditions for new battery systems such as ultra-wide-temperature-range batteries, sodium-ion batteries, and solid-state batteries, supporting their transition from the laboratory to global applications.

High temperature-control precision and stability are core indicators of the credibility of test data. The industry standard requirement is a temperature control precision of ±0.1℃ and a temperature fluctuation of ≤1℃. Battery electrochemistry is extremely sensitive to temperature: a difference of just 1℃ can shift measured capacity by 2% to 5% and internal resistance by more than 10%. More critically, scenarios such as new material screening and degradation mechanism research require repeated comparison of experimental data across batches. If temperature control precision is insufficient, researchers cannot tell whether observed performance differences come from material improvements or are merely noise caused by temperature drift. High-precision, high-stability temperature control is therefore the foundation for reproducible, traceable, and cross-validatable research data.
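The deviation and fluctuation figures above can be computed from a logged temperature trace. Definitions vary between standards, so the sketch below assumes one common convention (deviation as the mean reading minus the setpoint, fluctuation as half the peak-to-peak spread of a stabilized trace); the readings and limits are hypothetical.

```python
from statistics import mean

def temperature_metrics(samples_c: list[float], setpoint_c: float) -> dict[str, float]:
    """Summarize a stabilized chamber temperature log.

    Assumed conventions (check them against the standard you test to):
      deviation   = mean reading minus setpoint
      fluctuation = half the peak-to-peak spread, reported as a +/- value
    """
    if not samples_c:
        raise ValueError("need at least one sample")
    return {
        "deviation": mean(samples_c) - setpoint_c,
        "fluctuation": (max(samples_c) - min(samples_c)) / 2.0,
    }

def within_spec(metrics: dict[str, float], max_dev: float, max_fluct: float) -> bool:
    """True if both metrics fall inside the given limits."""
    return abs(metrics["deviation"]) <= max_dev and metrics["fluctuation"] <= max_fluct

readings = [25.05, 24.98, 25.10, 24.95, 25.02]  # hypothetical log at a 25 C setpoint
m = temperature_metrics(readings, 25.0)
print(m, within_spec(m, max_dev=0.1, max_fluct=1.0))
```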

Battery performance testing accuracy determines whether the equipment can capture subtle yet critical electrochemical signals. For research and development scenarios such as coin cells, half-cells, and novel electrolyte screening, the equipment must be able to acquire currents at the microamp (μA) or even nanoamp (nA) level, with voltage measurement accuracy on the order of hundredths of a percent of full scale (e.g., 0.02% FS). Many new materials exhibit extremely small capacity contributions and weak polarization characteristics at extreme temperatures; if testing accuracy is insufficient, these potentially breakthrough phenomena are buried in system noise, leading to misjudged results or missed discoveries. High-precision testing effectively gives researchers a high-power microscope.
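Accuracy quoted as a percentage of full scale (FS) translates into an absolute error that depends on the selected range: 0.02% FS is 2×10⁻⁴ of the range. The arithmetic below uses hypothetical 5 V and 1 mA ranges and shows why small currents should be measured on correspondingly small ranges: the same spec that gives 1 mV on a 5 V range allows up to 2% error on a 10 μA signal read on a 1 mA range.

```python
def fs_error(accuracy_percent_fs: float, full_scale: float) -> float:
    """Worst-case absolute error for an accuracy spec given as % of full scale."""
    return accuracy_percent_fs / 100.0 * full_scale

v_err = fs_error(0.02, 5.0)    # hypothetical 5 V voltage range -> volts
i_err = fs_error(0.02, 1e-3)   # hypothetical 1 mA current range -> amperes
signal_a = 10e-6               # a 10 uA signal measured on that 1 mA range
rel_err = i_err / signal_a     # worst-case error relative to the small signal

print(f"voltage error: {v_err * 1e3:.3f} mV")
print(f"current error: {i_err * 1e6:.3f} uA ({rel_err:.1%} of a 10 uA signal)")
```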

Military-grade explosion-proof safety design is the lifeline that protects laboratory personnel and property. Typical configurations include explosion-proof doors with pressure relief channels, explosion-proof observation windows, physical separation of the test chamber from the control system, over-temperature power-off interlocks, smoke alarms, automatic fire suppression, and sprinkler systems. Extreme-temperature battery testing, particularly destructive experiments such as high-temperature thermal abuse, overcharge/overdischarge, nail penetration, and crush tests, carries real risks of fire, explosion, and toxic gas release. The core logic of explosion-proof design is not to prevent accidents but to contain their hazards strictly within the chamber: even if a battery undergoes violent thermal runaway, the pressure wave is released directionally through the relief channels, flames are extinguished by the automatic fire suppression system, and toxic gases do not escape into the laboratory. For research institutions that handle high-energy-density batteries every day, this characteristic is as important as testing accuracy; it reflects both professional ethics and sound engineering.

An adjustable temperature ramp rate supports dynamic tests such as thermal shock and temperature cycling; typical equipment offers a programmable ramp rate of 1℃/min to 10℃/min. In real-world operation, batteries rarely stay at a constant temperature for long: when an electric vehicle drives from a warm garage into -30℃ outdoor conditions, the battery pack cools rapidly within minutes, and fast charging causes temperature swings driven by the interplay between the battery's self-heating and the cooling system. By simulating realistic rates of temperature change, the equipment can more accurately reproduce how batteries respond and degrade under actual use, providing more realistic input data for thermal management system calibration and life prediction models.
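Ramp-rate choices translate directly into test duration. The sketch below assumes a constant ramp rate, ignores the lag between chamber air and cell temperature, and uses a hypothetical (time, setpoint) breakpoint format that is not any chamber's actual program syntax. Assuming a 25℃ garage in the example above, cooling to -30℃ takes 55 minutes at 1℃/min but only 5.5 minutes at 10℃/min.

```python
def ramp_minutes(start_c: float, target_c: float, rate_c_per_min: float) -> float:
    """Time in minutes to move between two setpoints at a constant ramp rate."""
    if rate_c_per_min <= 0:
        raise ValueError("ramp rate must be positive")
    return abs(target_c - start_c) / rate_c_per_min

def cycle_profile(low_c: float, high_c: float, rate_c_per_min: float,
                  dwell_min: float, cycles: int,
                  start_c: float = 25.0) -> list[tuple[float, float]]:
    """Build (time_min, setpoint_c) breakpoints for a temperature cycle:
    ramp to low, dwell, ramp to high, dwell, repeated `cycles` times."""
    t, temp = 0.0, start_c
    points = [(t, temp)]
    for _ in range(cycles):
        for target in (low_c, high_c):
            t += ramp_minutes(temp, target, rate_c_per_min)  # ramp segment
            temp = target
            points.append((t, temp))
            t += dwell_min                                   # soak at target
            points.append((t, temp))
    return points

print(ramp_minutes(25.0, -30.0, 1.0), ramp_minutes(25.0, -30.0, 10.0))
print(cycle_profile(-30.0, 60.0, rate_c_per_min=5.0, dwell_min=30.0, cycles=1))
```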

Long-term operational reliability and unattended operation are fundamental to continuous cycle tests lasting weeks or even months. Technical implementations include automatic recovery after power loss (power-off memory), intelligent compressor start/stop protection, and high-density polyurethane/fiberglass insulation. Battery cycle life testing typically requires thousands of hours of continuous operation. If the equipment halts midway because of a minor fault such as a momentary power interruption or compressor overheat protection, and cannot resume without human intervention, the entire experimental sequence may be wasted at a high cost in time and samples. Only equipment with fault self-recovery, component overload protection, and long-term thermal stability is truly suited to the full research chain from material screening to life validation, becoming a reliable "automated experimental partner" for researchers.
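Power-off memory recovery can be illustrated with a simple checkpointing pattern. The sketch below is hypothetical and is not how NEWARE or any other vendor implements it: after each test step completes, it records the index of the next step in a small JSON file, writing through a temporary file and os.replace (atomic on POSIX file systems) so that a power cut during the write cannot corrupt the checkpoint. On restart, execution resumes at the first unfinished step.

```python
import json
import os
from typing import Callable

def run_with_resume(steps: list[Callable[[], None]], state_path: str) -> None:
    """Run test steps in order, recording progress after each one so that a
    restart (e.g. after a power interruption) resumes at the next step."""
    next_step = 0
    if os.path.exists(state_path):
        with open(state_path, encoding="utf-8") as f:
            next_step = json.load(f)["next_step"]
    for i in range(next_step, len(steps)):
        steps[i]()
        # Write the checkpoint to a temp file, flush it to disk, then swap it
        # into place so the state file is never left half-written.
        tmp_path = state_path + ".tmp"
        with open(tmp_path, "w", encoding="utf-8") as f:
            json.dump({"next_step": i + 1}, f)
            f.flush()
            os.fsync(f.fileno())
        os.replace(tmp_path, state_path)
```

A step interrupted partway through is rerun from its beginning, so real equipment running long charge or discharge steps would also need finer-grained checkpoints (for example, accumulated capacity and elapsed time).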

Advantages of all-in-one testing equipment: the NEWARE WHW-25L as an example

Traditional high and low temperature battery testing suffers from three major pain points: equipment fragmentation, space occupation, and difficulty coordinating data. Researchers must pair charge-discharge testing cabinets with separate environmental chambers, a setup that not only occupies twice the laboratory space but also introduces cross-device compatibility issues, temperature control lag, and data alignment errors.

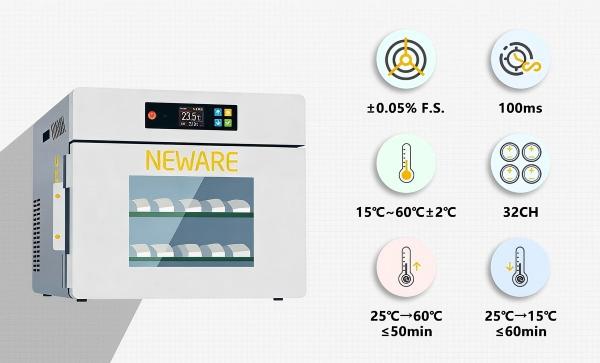

The NEWARE WHW-25L 32CH Constant Temperature Integrated Testing Machine, along with a range of integrated high and low temperature testing equipment, breaks with this traditional paradigm by combining the tester and the temperature chamber in a single, highly integrated unit.

Figure 7 introduction diagram of the NEWARE 25L constant temperature integrated testing machine

The advantages of all-in-one testing equipment

Deep functional integration and high space utilization. The WHW-25L occupies a total floor area of less than 0.5 square meters. Its 32 high-density test channels support the simultaneous and independent operation of 32 coin cells, providing efficient batch processing capabilities for material screening, cycle stability evaluation, and quality control.

Temperature control precision and stability. The chamber covers 15℃ to 60℃, with intelligent algorithms dynamically adjusting the heating/cooling modules. Under no-load conditions, the temperature deviation is ±2.0℃ and the fluctuation is ≤1℃. The same precision-control approach lays the groundwork for future models with a wider (high and low) temperature range. Good temperature uniformity within the chamber supports highly reproducible test data.

Intelligent operation and high safety. The WHW-25L has a touchscreen LCD with a graphical interface and infrared human-presence sensing. For safety, the equipment integrates a three-tier protection mechanism (power-off data protection, short-circuit protection, and real-time circulation fan monitoring) that supports stable long-term tests lasting thousands of hours.

Expandable compatibility. The system supports multiple specialized battery testing modes and allows DBC (CAN database) files to be imported and edited, so it can adapt to various communication protocols. It also supports cutting-edge materials research and development, including solid-state batteries and novel electrolyte materials.
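DBC files are the widely used format for describing how raw CAN frames map to named physical signals. As a hypothetical illustration of what such a definition encodes (not how the WHW-25L parses DBC files), the sketch below decodes one little-endian (Intel, @1) signal from its start bit, length, factor, and offset. Big-endian (Motorola, @0) signals use a different bit numbering and are not handled here; the signal name and frame contents are invented for the example.

```python
from dataclasses import dataclass

@dataclass
class IntelSignal:
    """Subset of a DBC signal definition such as
    SG_ CellTemp : 8|8@1+ (1,-40) [-40|215] "degC" BMS
    Only little-endian (@1, Intel) signals are handled in this sketch."""
    start_bit: int
    length: int
    factor: float
    offset: float
    signed: bool = False

def decode(sig: IntelSignal, payload: bytes) -> float:
    """Extract the raw bits for a little-endian signal and scale them."""
    raw = (int.from_bytes(payload, "little") >> sig.start_bit) & ((1 << sig.length) - 1)
    if sig.signed and raw & (1 << (sig.length - 1)):
        raw -= 1 << sig.length  # two's-complement sign extension
    return raw * sig.factor + sig.offset

# Hypothetical 8-byte frame whose byte 1 (bits 8-15) holds 0x41 = 65.
frame = bytes([0x00, 0x41, 0, 0, 0, 0, 0, 0])
cell_temp = IntelSignal(start_bit=8, length=8, factor=1.0, offset=-40.0)
print(decode(cell_temp, frame))  # 65 * 1.0 - 40 -> prints 25.0
```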

The importance and significance of high and low temperature testing

The importance of high and low temperature testing is rising rapidly as battery application scenarios expand along three dimensions.

From ground to sky: the low-altitude economy and commercial aerospace have become core growth poles for wide-temperature-range batteries. By the end of 2025, China had approximately 800 commercial satellites in orbit, and future low Earth orbit constellation networking will generate explosive demand for space energy storage batteries with operating ranges covering -120℃ to +120℃. Batteries that have not passed simulation testing for extreme vacuum, thermal cycling, and high-energy particle radiation cannot obtain their "ticket" to space.

From a single technology pathway to a diversified landscape: Sodium-ion batteries, leveraging their excellent low-temperature performance, are rapidly penetrating sectors such as two-wheeled electric vehicles, energy storage, and specialty vehicles. Solid-state batteries, which balance high energy density with enhanced safety, began small-scale vehicle trials in 2026 and are expected to reach large-scale commercialization around 2030. Different technology pathways respond differently to temperature and therefore need tailored testing standards: sodium-ion batteries require focused validation of discharge retention below -40℃, while solid-state batteries demand closer attention to high-temperature interface stability. High and low temperature testing equipment is the common foundation supporting the parallel development of this diversified technology ecosystem.

Figure 8 CATL's sodium-ion mass-produced electric vehicle battery (Naxtra)

From passive verification to active definition: In the past, testing served as the "final checkpoint" before product finalization; in the future, testing will be deeply embedded throughout the entire process of material research, cell design, and system integration. Taking AI-driven materials research as an example, massive amounts of high-confidence high and low temperature testing data serve as the "fuel" for training electrochemical models and predicting the performance of new materials. Those who possess higher throughput, higher precision, and more standardized high and low temperature testing capabilities will hold the "defining power" for battery technology iteration.

The deeper significance of high and low temperature testing

High and low temperature testing is no longer an "optional accessory" in battery research and development; it has become core infrastructure underpinning product safety baselines, user experience commitments, and the pace of technological iteration. From -70℃ extreme-cold simulation chambers to compact benchtop integrated testing machines like the NEWARE WHW-25L, every advance in equipment shortens the path for a new battery technology from research papers to mass production, and from the laboratory to the polar regions, outer space, and everyday life. In 2026, an era in which battery technologies "blossom in diversity and scenarios reign supreme," the depth and breadth of a country's high and low temperature testing capabilities are emerging as an implicit measure of its competitiveness in the battery industry.